LLM Comparison: Top Models, Companies, and Use Cases

Read about LLM comparisons with rankings, top LLM companies, key use cases, and how B2B teams choose models for GTM, analytics, and automation.

I’ve lost count of how many B2B meetings I’ve sat in where someone confidently says:

“We should just plug an LLM into this.”

This usually happens right after:

- someone pulls up a dashboard no one fully trusts

- attribution turns into a philosophical debate

- sales says marketing insights are “interesting” but not usable

The assumption is always the same.

LLMs are powerful, advanced AI models, so surely they can ✨magically✨ fix decision-making.

They cannot.

What they can do very well is spot patterns, compress complexity, and help humans think more clearly. What they are terrible at is navigating the beautiful chaos of B2B reality, where context is scattered across tools, teams, timelines, and the occasional spreadsheet someone refuses to let go of.

That disconnect is exactly why most LLM comparison articles feel slightly off. They obsess over which model is smartest in isolation, instead of asking a far more useful question: which model actually survives production inside a B2B stack?

This guide is written for people choosing LLMs for:

- GTM analytics

- marketing and sales automation

- attribution and funnel analysis

- internal decision support

It is a B2B-first LLM comparison, grounded in how teams actually use these models once the meeting ends and real work begins.

What is a Large Language Model (LLM)?

An LLM, or large language model, is a system trained to understand and generate language by learning patterns from vast amounts of text. That scale of training data is what gives LLMs their broad language capabilities.

That definition is accurate and also completely useless for business readers like you (and me).

So, let me give you the version that’s actually helpful.

An LLM is a reasoning layer that can take unstructured inputs and turn them into structured outputs that humans can act on.

You give it things like:

- questions

- instructions

- documents

- summaries of data

- internal notes that are not as clear as they should be

It gives you:

- explanations

- summaries

- classifications

- recommendations

- drafts

- analysis that looks like thinking

For B2B teams, this matters because most business problems are not data shortages. They are interpretation problems. The data exists, but no one has the time or patience to connect the dots across systems.

Why the LLM conversation changed for business teams

A while ago, the discussion around LLMs revolved around intelligence. Everyone wanted to know which model could reason better, write better, answer trickier questions, and code really really well.

Now… that phase passed quickly. Ongoing research kept making the models more capable, but capability on its own stopped being the interesting question.

Once LLMs moved from demos into daily workflows, new questions took over (obviously):

- Can this model work reliably inside our systems?

- Can we control what data it sees?

- Can legal and security sign off on it?

- Can finance predict what it will cost when usage grows?

- Can teams trust the outputs enough to act on them?

This shift changed how LLM rankings should be read. Raw intelligence stopped being the main deciding factor. Operational fit started to matter more.

The problem (most) B2B teams run into

Here’s something I’ve seen repeatedly. Most LLM failures in B2B are NOT because of the LLMs they use.

They are context failures.

Let’s see how… your CRM has partial data. Your ad platforms tell a different story. Product usage lives somewhere else. Revenue data arrives late. Customer conversations are scattered across tools. When an LLM is dropped into this whole situation, it does exactly what it is designed to do. It fills gaps with confident language.

That is why teams say things like:

- “The insight sounded right but was not actionable”

- “The summary missed what actually mattered”

- “The recommendation did not match how we run our funnel”

Look… the model was not broken, but the inputs sure were incomplete.

Understanding this is critical before you compare types of LLM, evaluate top LLM companies, or decide where to use these models inside your stack.

LLMs amplify whatever system you already have. If your data is clean and connected, they become a powerful decision aid. If your context is fragmented, they become very articulate guessers.

Integrating external knowledge sources can mitigate context failures by providing LLMs with more complete information.

That framing will matter throughout this guide.

Types of LLMs you’ll see…

Most explanations for ‘types of LLM’ sound like they were written for machine learning engineers. That is not helpful when you are a marketer, revenue leader, or someone who prefers normal English… trying to choose tools that will actually work within your stack.

This section breaks down LLMs by how B2B teams actually encounter them in practice. Many of these are considered foundation models because they serve as the base for a wide range of applications, enabling scalable and robust AI systems.

- General-purpose LLMs

These are the models most people meet first. They are designed to handle a wide range of tasks without deep specialization.

In practice, B2B teams use them for:

- Drafting emails and content

- Summarizing long documents

- Answering ad hoc questions

- Structuring ideas and plans

- Basic analysis and explanations

They are flexible and easy to start with. That is why they show up in almost every early LLM comparison.

The trade-off becomes apparent when teams try to scale usage. Without strong guardrails and context, outputs can vary across users and teams. One person gets a great answer… another gets something vague… and consistency becomes the biggest problem.

General-purpose models work best when they sit behind structured workflows rather than free-form chat windows.

- Domain-tuned LLMs

Domain-tuned LLMs are optimized for specific industries or functions. Instead of trying to be good at everything, they focus on narrower problem spaces.

Common domains include:

- Finance and risk

- Healthcare and life sciences

- Legal and compliance

- Enterprise sales and GTM workflows

B2B teams turn to these models when accuracy and terminology matter more than creativity. For example, a Sales Ops team analyzing pipeline stages does not want flowery language; they want outputs that match how their business actually runs.

The limitation is flexibility. These models perform well inside their lane, but they can feel rigid when asked to step outside it. They also depend heavily on how well the domain knowledge is maintained over time.

- Multimodal LLMs

Multimodal LLMs can work with more than just text. Depending on the setup, they can process images, charts, audio, and documents alongside written input.

This shows up in places like:

- Reviewing slide decks and dashboards

- Analyzing screenshots from tools

- Summarizing call recordings

- Extracting insights from PDFs and reports

This category matters more than many teams expect. Real business data is rarely clean text. It lives in decks, spreadsheets, recordings, and screenshots shared over chat.

Multimodal models reduce the friction of converting all that into text before analysis. The tradeoff is complexity. These models require more careful setup and testing to ensure outputs stay grounded.

- Embedded LLMs inside tools

This is the category most teams end up using the most, even if they do not think of it as ‘choosing’ an LLM.

You don’t go out and buy a ‘model’. You use:

- A CRM with AI assistance

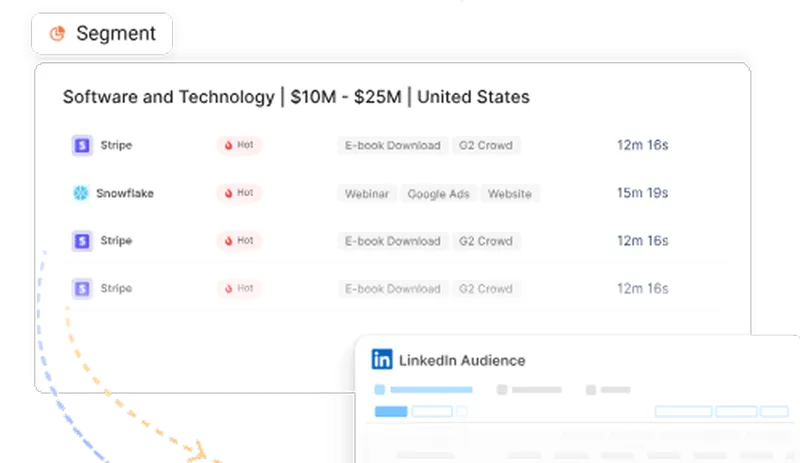

- An analytics platform with AI insights

- A GTM tool with built-in agents

- A support system with automated summaries

Here, the LLM is embedded inside a product that already controls:

- Data access

- Permissions

- Workflows

- Context

For B2B teams, this often delivers the fastest value. The model already knows where to look and what rules to follow. The downside is reduced visibility into which model is used and how it is configured.

P.S.: This is also why many companies do not realize they are consuming multiple LLMs at the same time through different tools.

- Open-source vs proprietary LLMs

This distinction cuts across all the categories above.

Open-source LLMs give teams more control over deployment, tuning, and data governance. They appeal to organizations with strong engineering teams and strict compliance needs.

Proprietary LLMs offer managed performance, easier onboarding, and faster iteration. They appeal to teams that want results without owning infrastructure.

Most mature teams end up with a mix… they might use proprietary models for speed and open-source models where control matters more. I will break down this decision later in the guide.

Understanding these categories makes the rest of this LLM comparison easier. When people ask which model is best, the only honest answer is that it ALL depends on which type they actually need.

How we’re comparing LLMs in this guide

If you read a few LLM ranking posts back to back, you will notice a pattern. Most of them assume the reader is an individual user chatting with a model in a blank window.

That assumption breaks down completely in B2B.

When LLMs move into production, they stop being toys and start behaving like infrastructure. They touch customer data, influence decisions, and sit inside workflows that multiple teams rely on. That changes how they should be evaluated.

So before we get into LLM rankings, it is important to be explicit about how this comparison works and what it is designed to help you decide.

Capability still matters here, but it is judged by how well each model handles complex, real business tasks and requirements, not by how it performs in isolation.

- Reasoning and output quality

The first thing most teams test is whether a model sounds smart. That is necessary, but it’s not enough.

For business use, output quality shows up in quieter ways:

- Does the model follow instructions consistently?

- Can it handle multi-step reasoning without drifting?

- Does it stay aligned to the same logic across repeated runs?

- Can it work with structured inputs like tables, stages, or schemas?

In GTM and analytics workflows, consistency matters more than clever phrasing. A model that gives slightly less polished language but a predictable structure is usually easier to operationalize.
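One way to make "stays aligned across repeated runs" measurable is to send the same inputs several times and count how often the runs agree. The sketch below assumes you have already collected the label each run produced; the account IDs and stage labels are hypothetical, and no model is called.

```python
from collections import Counter


def agreement_rate(runs_by_input: dict[str, list[str]]) -> float:
    """Share of inputs where every repeated run returned the same label."""
    if not runs_by_input:
        return 0.0
    consistent = sum(
        1 for labels in runs_by_input.values() if len(set(labels)) == 1
    )
    return consistent / len(runs_by_input)


def majority_share(labels: list[str]) -> float:
    """Fraction of runs that match the most common label for one input."""
    most_common_count = Counter(labels).most_common(1)[0][1]
    return most_common_count / len(labels)


# Hypothetical results: three runs of the same stage-classification prompt.
runs = {
    "acct-001": ["evaluation", "evaluation", "evaluation"],
    "acct-002": ["negotiation", "evaluation", "negotiation"],
    "acct-003": ["discovery", "discovery", "discovery"],
}

print(f"fully consistent inputs: {agreement_rate(runs):.0%}")
for account, labels in runs.items():
    print(account, f"{majority_share(labels):.0%}")
```

Tracking a number like this over time is what turns "it feels consistent" into something a team can compare across models or prompt versions.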

- Data privacy and compliance readiness

This is where many promising pilots quietly die.

B2B teams need clarity on:

- How data is stored

- How long it is retained

- Whether it is used for training

- Who can access outputs

- How permissions are enforced

Models that work fine for individual use often stall here. Legal and security teams do not want assurances. They want documented controls and clear answers.

In real LLM comparisons, this criterion quickly narrows the shortlist.

- Integration and API flexibility

Most serious LLM use cases do not live in a chat window.

They live inside:

- CRMs

- Data warehouses

- Ad platforms

- Analytics tools

- Internal dashboards

That makes integration quality critical. B2B teams care about:

- Stable APIs

- Function calling or structured outputs

- Support for agent workflows

- Ease of connecting to existing systems

A model that cannot integrate cleanly becomes a bottleneck, no matter how strong it looks in isolation.
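Whatever function-calling or structured-output feature a provider offers, it still helps to validate the reply on your side before anything downstream consumes it. A minimal sketch, assuming the model was asked to return JSON with three hypothetical fields (`account_id`, `stage`, `next_step`) and a fixed list of allowed stages; it uses only the Python standard library and is not any vendor's API.

```python
import json

# Hypothetical contract agreed with the model in the prompt.
REQUIRED_FIELDS = {"account_id": str, "stage": str, "next_step": str}
ALLOWED_STAGES = {"discovery", "evaluation", "negotiation", "closed"}


def parse_model_reply(raw: str) -> dict:
    """Parse a model reply and reject anything outside the agreed schema."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or mistyped field: {field}")
    if data["stage"] not in ALLOWED_STAGES:
        raise ValueError(f"unknown stage: {data['stage']}")
    # Drop any extra keys so downstream systems only see the contract.
    return {field: data[field] for field in REQUIRED_FIELDS}


good = '{"account_id": "acct-001", "stage": "evaluation", "next_step": "Book demo", "mood": "upbeat"}'
print(parse_model_reply(good))

bad = '{"account_id": "acct-002", "stage": "almost closed", "next_step": "Follow up"}'
try:
    parse_model_reply(bad)
except ValueError as err:
    print("rejected:", err)
```

Rejecting a malformed reply loudly is usually cheaper than letting a CRM or dashboard silently ingest it.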

- Cost predictability at scale

Almost every LLM looks affordable in a demo.

Things change when:

- Usage becomes daily

- Multiple teams rely on it

- Automation runs continuously

- Data volumes increase

For B2B teams, cost predictability matters more than headline pricing. Finance teams want to know what happens when usage doubles or triples. Product and ops teams want to avoid sudden spikes that force them to throttle workflows.

This is why cost shows up as a first-class factor in this LLM comparison, not an afterthought.
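The arithmetic behind "what happens when usage doubles" is simple enough to sketch. Most API providers bill per token, often with separate rates for input and output tokens. The prices, volumes, and token counts below are placeholders, not any vendor's real pricing.

```python
def monthly_cost(
    requests_per_day: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
    days: int = 30,
) -> float:
    """Projected monthly spend for one workflow under per-token pricing."""
    per_request = (
        input_tokens * input_price_per_million
        + output_tokens * output_price_per_million
    ) / 1_000_000
    return requests_per_day * days * per_request


# Placeholder numbers for an account-summary workflow (not real pricing).
baseline = monthly_cost(
    requests_per_day=2_000,
    input_tokens=3_000,
    output_tokens=500,
    input_price_per_million=2.50,
    output_price_per_million=10.00,
)
print(f"baseline: ${baseline:,.2f}")
for multiplier in (2, 3):
    print(f"{multiplier}x usage: ${baseline * multiplier:,.2f}")
```

Because the projection is linear in both request volume and tokens per request, the same formula shows that trimming the context sent with each call lowers spend as directly as growth raises it.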

- Enterprise adoption and ecosystem

Some LLM companies are building entire ecosystems around their models. Others focus narrowly on model research or open distribution.

Ecosystem strength affects:

- How easy it is to hire talent

- How quickly teams can experiment

- How stable tooling feels over time

- How much community knowledge exists

For B2B teams, this often matters more than raw model capability. A slightly weaker model with strong tooling and adoption can outperform a technically superior one in production.

- Suitability for analytics, automation, and decision-making

This is the filter that matters most for this guide.

Many models can write. Fewer models can:

- Interpret business signals

- Explain how they arrived at a recommendation

- Support repeatable decision workflows

- Work reliably with imperfect real-world data

Since this guide focuses on LLM use cases tied to GTM and analytics, models are evaluated on how well they support reasoning that leads to action, not just answers that sound good.

Large Language Models Rankings: Top LLM Models

Before we get into specific models, one thing needs to be said clearly.

There is no single best LLM for every B2B team.

Every LLM comparison eventually lands at this exact point. What matters is how a model behaves once it is exposed to real data, real workflows, real users, and real constraints. The rankings below are based on how these powerful models perform across analytics, automation, and decision-making use cases, not how impressive they look in isolation. Each company's flagship model is evaluated for its strengths, versatility, and suitability for complex business tasks.

Note: Think of this as a practical map, not a trophy list.

- GPT models (GPT-4.x, GPT-4o, and newer tiers)

Best at:

Structured reasoning, instruction following, agent workflows

Why B2B teams use it:

GPT models are often the easiest starting point for production-grade workflows. They handle complex instructions well, follow schemas reliably, and adapt across a wide range of tasks without breaking. For GTM analytics, pipeline summaries, account research, and workflow automation, this reliability matters.

GPT-4o, available through both the OpenAI API and ChatGPT, is one of the most widely used models in this family. It was introduced as OpenAI's flagship model and adds strong multimodal capabilities across text, images, and audio.

I’ve seen teams trust GPT-based systems for recurring analysis because outputs remain consistent across runs. That makes it easier to build downstream processes that depend on the model behaving predictably.

Where it struggles:

Costs can scale quickly once usage becomes embedded across teams. Without strong context control, outputs can still sound confident while missing internal nuances. This model performs best when wrapped inside systems that tightly manage inputs and permissions.

- Claude models (Claude 3.x and above)

Best at:

Long-context understanding, careful reasoning, document-heavy tasks

Why B2B teams use it:

Claude shines when the input itself is complex. Long internal documents, policies, contracts, and knowledge bases are handled with clarity. That makes it a common choice for teams that need careful document analysis, thoughtful summaries, and clear explanations for internal decision support and enablement.

Its tone tends to be measured, which helps in environments where explainability and caution are valued.

Where it struggles:

In automation-heavy GTM workflows, Claude can feel slower to adapt. It sometimes requires more explicit instruction to handle highly structured logic or aggressive agent behavior. For teams pushing high-volume automation, this becomes noticeable.

- Gemini models (Gemini 1.5 and newer)

Best at:

Multimodal reasoning and ecosystem-level integration

Why B2B teams use it:

Gemini performs well when text needs to interact with charts, images, or documents.

Its ability to handle multimodal tasks makes it helpful in reviewing dashboards, analyzing slides, and working with mixed-media inputs. Teams already invested in the Google ecosystem often benefit from smoother integration and deployment.

For analytics workflows that include visual context, this is a meaningful advantage.

Where it struggles:

Outside tightly integrated environments, setup and tuning can require more effort. Output quality can vary unless prompts are carefully structured. Teams that rely on consistent schema-driven outputs may need additional validation layers.

- Llama models (Llama 3 and newer)

Best at:

Controlled deployment and customization

Why B2B teams use it:

Llama models appeal to organizations that want ownership. Being open-source, they can be deployed internally, fine-tuned for specific workflows, and governed according to strict compliance requirements. These highly customizable models allow teams to adapt the LLM to their unique needs and industries. For teams with strong engineering capabilities, this control is valuable.

In regulated environments, this flexibility often outweighs raw performance differences.

Where it struggles:

Out-of-the-box performance may lag behind proprietary models for complex reasoning tasks. The real gains appear only after investment in tuning, infrastructure, and monitoring. Without that, results can feel inconsistent.

- Mistral models

Best at:

Efficiency and strong performance relative to size

Why B2B teams use it:

Mistral has built a reputation for delivering capable models that balance performance and efficiency. For teams experimenting with open deployment or cost-sensitive automation, this balance matters. Mistral models often deliver results competitive with much larger models, with lower compute and serving overhead.

Where it struggles:

Ecosystem maturity is still evolving. Compared to the largest LLM companies, tooling, documentation, and enterprise support may feel lighter, which affects rollout speed for larger teams.

- Cohere Command

Best at:

Enterprise-focused language understanding

Why B2B teams use it:

Cohere positions itself clearly around enterprise needs. Command models are often used in analytics, search, and internal knowledge workflows where clarity, governance, and stability matter. Teams building decision support systems appreciate the emphasis on business-friendly deployment.

Where it struggles:

It may not match the creative or general flexibility of broader models. For teams expecting one model to do everything, this can feel limiting.

- Domain-specific enterprise models

Best at:

Narrow, high-stakes workflows

Why B2B teams use them:

Some vendors build models specifically tuned for finance, healthcare, legal, or enterprise GTM. These models excel where accuracy and domain alignment are more important than breadth. In certain workflows, they outperform general-purpose models simply because they speak the same language as the business.

Where they struggle:

They are rarely flexible. Using them outside their intended scope often leads to poor results. They also depend heavily on the quality of the underlying domain knowledge.

Top LLM Companies to Watch

When people talk about LLM adoption, they often frame it as a model decision. In practice, B2B teams are also choosing a company strategy.

Some vendors are building horizontal platforms. Some are going deep into enterprise workflows. Others are shaping ecosystems around open models and engaging with the open source community. Understanding this helps explain why two teams using ‘LLMs’ can have wildly different experiences.

Below, I’ve grouped LLM companies by how they approach the market (not by hype or popularity).

Platform giants you know already (but let’s get to know them better)

These companies focus on building general-purpose models with broad applicability, then surrounding them with infrastructure, developer tooling, and ecosystems.

- OpenAI

OpenAI’s strength lies in building models that generalize well across tasks. Many B2B teams start here because the models are adaptable and the tooling ecosystem is mature. You will often see OpenAI models embedded inside analytics platforms, GTM tools, and internal systems rather than used directly.

OpenAI also provides APIs and AI tools that enable the development of generative AI applications across industries.

- Google

Google’s approach leans heavily into integration. For teams already using Google Cloud, Workspace, or related infrastructure, this can reduce friction. Their focus on multimodal capabilities also makes them relevant for analytics workflows that involve charts, documents, and visual context.

Google offers developer access through the Gemini API (the successor to its earlier PaLM API), which supports building generative AI applications for content creation, chatbots, and more.

- Anthropic

Anthropic positions itself around reliability and responsible deployment. Its models are often chosen by teams that prioritize long-context reasoning and careful outputs. In enterprise environments where trust and explainability matter, this positioning resonates.

Like other major players, Anthropic invests in developing its own LLMs for both internal and external use.

These companies tend to set the pace for the broader ecosystem. Even when teams do not use their models directly, many tools and generative AI applications are built on top of them.

Enterprise-first AI companies

Some vendors focus less on general intelligence and more on how LLMs behave inside business systems.

- Cohere

Cohere has consistently leaned into enterprise use cases like search, analytics, and internal knowledge systems. Their messaging and product design are oriented toward teams that want LLMs to feel like dependable infrastructure rather than experimental tech.

Enterprise-first AI companies often provide custom machine learning models tailored to specific business needs, enabling organizations to address unique natural language processing challenges.

This category matters because enterprise adoption is rarely about novelty. It is about governance, stability, and long-term usability.

Open-source leaders

Open-source LLMs shape a different kind of adoption curve. They give teams control, at the cost of convenience.

- Meta

Meta’s Llama models have become a foundation for many internal deployments. Companies that want to host models themselves, fine-tune them, or tightly control data flows often start here. Open-source Llama models provide access to the model weights, allowing teams to re-train, customize, and deploy the models on their own infrastructure.

- Mistral AI

The Mistral ecosystem has gained attention for efficient, high-quality open models. These are often chosen by teams that want strong performance without committing to fully managed platforms. Mistral’s open models also provide model weights, giving users full control for training and deployment.

Some open-source models, such as Google’s Gemma, are built on the same research as their proprietary counterparts (like Gemini), sharing the same foundational technology and scientific basis.

Open-source leaders rarely win on ease of use. They win on flexibility. For B2B teams with engineering depth, that tradeoff can be worth it.

Vertical AI companies building LLM-powered systems

A growing number of companies are not selling models at all. They are selling systems.

These vendors build products around specific functions and workflows, such as:

- sales intelligence platforms

- marketing analytics tools

- support automation systems

- financial analysis products

LLMs sit inside these tools as a reasoning layer, but customers never interact with the model directly. This is where many B2B teams actually use LLMs day-to-day.

It is also why comparing top LLM companies purely at the model level can be misleading. The value often derives from how well the model is implemented within a product.

A reality check for B2B buyers

Most B2B teams do not wake up and decide to ‘buy an LLM.’

They buy:

- A GTM platform

- An analytics tool

- A CRM add-on

- A support system

And those tools make LLM choices on their behalf. That is why a key factor for B2B buyers is seamless integration with their existing platforms, so new tools work efficiently within current workflows.

Understanding which companies power your stack helps you ask better questions about reliability, data flow, and long-term fit. It also explains why two teams using different tools can produce very different outcomes, even if their underlying models appear similar.

LLM use cases that matter for B2B teams

If you look at how LLMs are marketed, you would think their main job is writing content faster.

That is rarely why serious B2B teams adopt them.

In real GTM and analytics environments, LLMs are used when human attention is expensive and context is distributed. Beyond content generation, they handle a range of natural language processing tasks, including question answering, summarization, translation, and classification. The value shows up when they help teams see patterns, reduce manual work, and make better decisions with the data they already have.

Below are the LLM use cases that consistently matter in B2B, especially once teams move past experimentation.

- GTM analytics and signal interpretation

This is one of the most underestimated use cases.

Modern GTM teams are flooded with signals:

- Website visits

- Ad engagement

- CRM activity

- Pipeline movement

- Product usage

- Intent data

The problem is interpretation (not volume).

LLMs help by:

- Summarizing account activity across channels

- Explaining why a spike or drop happened

- Grouping signals into meaningful themes

- Translating raw data into plain-language insights

- Enabling semantic search across large sets of GTM signals, so teams can find related activity without exact keyword matches

I’ve often seen teams spend hours debating dashboards when an LLM-assisted summary could have surfaced the core insight in minutes. The catch is context. Without access to clean, connected signals, the explanation quickly becomes generic.
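The "catch is context" point can be made concrete. Before asking a model to explain an account, a pipeline can merge signals from each source into one compact context block and state explicitly which sources are missing, so the model is not left to fill gaps with confident language. A minimal sketch; the source names, event fields, and accounts are hypothetical.

```python
from collections import defaultdict

# Sources we expect to have for every account (hypothetical list).
# Events from sources outside this list are ignored in this sketch.
EXPECTED_SOURCES = ("crm", "ads", "web", "product")


def build_account_context(events: list[dict]) -> dict[str, str]:
    """Group raw signal events by account into a prompt-ready text block."""
    by_account = defaultdict(lambda: defaultdict(list))
    for event in events:
        by_account[event["account"]][event["source"]].append(event["summary"])

    contexts = {}
    for account, sources in by_account.items():
        lines = [f"Account: {account}"]
        for source in EXPECTED_SOURCES:
            if source in sources:
                lines.append(f"[{source}] " + "; ".join(sources[source]))
        missing = [s for s in EXPECTED_SOURCES if s not in sources]
        if missing:
            # Tell the model what it cannot see instead of letting it guess.
            lines.append("Missing sources: " + ", ".join(missing))
        contexts[account] = "\n".join(lines)
    return contexts


events = [
    {"account": "acme", "source": "crm", "summary": "moved to evaluation"},
    {"account": "acme", "source": "web", "summary": "3 pricing page visits"},
    {"account": "acme", "source": "ads", "summary": "clicked retargeting ad"},
]
print(build_account_context(events)["acme"])
```

The explicit "Missing sources" line is the important design choice: it gives the model (and the human reading its answer) a reason to hedge instead of inventing the product-usage story.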

- Sales and marketing automation

This is where LLMs save you lots of time (trust me).

Instead of hard-coded rules, teams use LLMs to:

- Draft outreach based on account context

- Customize messaging using recent activity

- Summarize sales calls and hand off next steps

- Prioritize accounts based on narrative signals, not just scores

- Assist with coding tasks such as automating scripts or workflows

Generating text for outreach and communication is a core function of LLMs in sales and marketing automation, enabling teams to produce coherent, contextually relevant content for various applications.

The strongest results appear when automation is constrained. Free-form generation looks impressive in demos but breaks down at scale. LLMs perform best when they work inside structured workflows with clear boundaries.

- Attribution and funnel analysis

Attribution is one of those things everyone cares about, but no one fully trusts.

LLMs help by:

- Explaining how different touchpoints influenced outcomes

- Summarizing funnel movement in human language

- Identifying patterns across cohorts or segments

- Answering ad hoc questions without pulling a new report

Note: This does NOT replace quantitative models… it complements them. Teams still need defined attribution logic. LLMs make the outputs understandable and usable across marketing, sales, and leadership.

- Customer intelligence and segmentation

Customer data lives across tools that refuse to talk to each other. LLMs step in as the stitching layer that brings everyone into the same conversation.

Common use cases include:

- Summarizing account histories

- Identifying common traits among high-performing customers

- Grouping accounts by behavior rather than static fields

- Surfacing early churn or expansion signals

- Performing document analysis to extract insights from customer records

This is especially powerful when paired with first-party data. Behavioral signals provide the model with real data to reason about, rather than relying on assumptions.

- Internal knowledge search and decision support

Ask any B2B team where knowledge lives, and you will get a nervous laugh. Policies, playbooks, decks, and documentation exist, but finding the right answer at the right time is painful.

LLMs help by:

- Answering questions grounded in internal documents

- Summarizing long internal threads

- Guiding new hires through existing knowledge

- Supporting leaders with quick, contextual explanations

Retrieval-augmented generation (RAG) can further improve the accuracy and relevance of answers by letting LLMs pull in information from external data sources, such as internal knowledge bases, at the moment a question is asked.

This use case tends to gain trust faster because the outputs can be traced back to known sources.
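Retrieval-augmented generation is easier to trust once you see its basic shape: find the most relevant internal passages, then hand only those, labeled with their source names, to the model. The sketch below uses simple word-overlap cosine similarity as a stand-in for the embedding search a production system would typically use. The documents, file names, and prompt wording are hypothetical, and the actual model call is left out.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, float]]:
    """Return the k most similar documents as (name, score) pairs."""
    q = tokenize(query)
    scored = [(name, cosine(q, tokenize(text))) for name, text in docs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def build_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Ground the question in retrieved passages and name each source."""
    passages = [f"[{name}] {docs[name]}" for name, _ in retrieve(query, docs, k)]
    return (
        "Answer using only the sources below and cite them by name.\n\n"
        + "\n".join(passages)
        + f"\n\nQuestion: {query}"
    )


docs = {
    "discount-policy.md": "Any discount above 20 percent needs approval from the VP of Sales.",
    "onboarding.md": "New hires shadow three discovery calls in their first week.",
    "security-faq.md": "Customer data is retained for 90 days after contract end.",
}
print(build_prompt("Who approves a discount above 20 percent?", docs, k=1))
```

Because each passage carries its file name into the prompt, the answer can cite where it came from, which is exactly why this use case earns trust faster.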

Open-Source vs Closed LLMs: What should you choose?

This question shows up in almost every LLM conversation…

“Should we use an open-source LLM or a closed, proprietary one?”

There is no universal right answer here. What matters is how much control you need, how fast you want to move, and how much operational responsibility your team can realistically handle.

Open-source LLMs offer greater control for developers and businesses, particularly for deployment, customization, and handling sensitive data. They can also be fine-tuned to meet specific business needs or specialized tasks, providing flexibility that closed models may not offer.

Here’s what open-source models offer

Open-source LLMs appeal to teams that want ownership.

With open models, you can:

- Deploy the model inside your own infrastructure

- Control exactly where data flows

- Fine-tune behavior for specific workflows

- Build customizable and conversational agents tailored to your needs

- Meet strict internal governance requirements

This makes a world of difference in regulated environments or companies with strong engineering teams. When legal or security teams ask uncomfortable questions about data handling, open-source setups often make those conversations easier.

But with great open-source models… comes great responsibility.

You own:

- Hosting and scaling

- Monitoring and evaluation

- Updates and improvements

- Performance tuning over time

If you don’t have the resources to maintain this properly, results can degrade quickly.

Now… here’s what closed LLMs offer

Closed or proprietary LLMs optimize for speed and convenience.

They typically provide:

- Managed infrastructure

- Fast iteration cycles

- Strong default performance, often state-of-the-art out of the box

- Minimal setup effort

For many B2B teams, this is the fastest path to value. You can test, deploy, and scale without becoming an AI operations team overnight.

The trade-off is control. You rely on the vendor’s policies, pricing changes, and roadmap. Data handling is governed by contracts and configurations rather than full ownership.

For teams that prioritize execution speed, this is often an acceptable compromise.

Why many B2B teams go hybrid

In real-world deployments, pure strategies are very rare.

Many companies:

- Use proprietary LLMs for experimentation and general workflows

- Deploy open-source models for sensitive or regulated use cases

- Consume LLMs indirectly through tools that abstract these choices away

This hybrid approach allows teams to balance speed and control. It also reduces risk. If one model or vendor becomes unsuitable, the system does not collapse. Additionally, hybrid strategies enable teams to incorporate generative AI capabilities from both open and closed models, enhancing flexibility and innovation.
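In practice, going hybrid often comes down to a routing rule that decides, per request, which model is allowed to see the data. A minimal sketch, assuming two hypothetical backends (a self-hosted open-weight model and a managed proprietary API) and a crude keyword-based sensitivity check; a real deployment would rely on proper data classification rather than keyword matching.

```python
from dataclasses import dataclass

# Hypothetical backend names; substitute whatever your stack actually uses.
SELF_HOSTED = "self-hosted-open-model"
MANAGED_API = "managed-proprietary-api"

# Crude stand-in for a real data-classification step.
SENSITIVE_MARKERS = ("ssn", "salary", "medical", "contract value")


@dataclass
class Request:
    task: str
    text: str
    regulated_workflow: bool = False


def is_sensitive(request: Request) -> bool:
    lowered = request.text.lower()
    return request.regulated_workflow or any(m in lowered for m in SENSITIVE_MARKERS)


def route(request: Request) -> str:
    """Keep sensitive or regulated work on infrastructure you control."""
    return SELF_HOSTED if is_sensitive(request) else MANAGED_API


print(route(Request("draft", "Write a follow-up email after the webinar")))
print(route(Request("summarize", "Summarize salary bands for the EMEA team")))
print(route(Request("classify", "Tag this claim note", regulated_workflow=True)))
```

Because the rule lives in your own code rather than inside one vendor's product, swapping a backend later is a small change, which is part of how a hybrid setup avoids collapsing when one vendor becomes unsuitable.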

A simple decision framework

If you are deciding between open-source and closed LLMs, start here:

- Early-stage or lean teams:

Closed models are usually the right choice. Speed matters more than control.

- Mid-sized teams with growing data maturity:

A mix often works best. Use managed models for general tasks and explore open options where governance matters.

- Large enterprises or regulated industries:

Open-source models or tightly governed deployments become more attractive.

- Teams with specific requirements:

Customizable models allow you to fine-tune large language models for your use case, industry, or domain, improving performance and relevance.

The goal is NOT to pick a side. The goal is to CHOOSE what supports your workflows without creating unnecessary operational drag.
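If it helps to see the framework as explicit logic, here is a small sketch. The team-size buckets (`"lean"`, `"mid"`, `"enterprise"`) are illustrative labels, not fixed thresholds, and the function only encodes the rules of thumb listed above; it is a starting point for discussion, not a substitute for evaluating models against your own workflows.

```python
from dataclasses import dataclass
from enum import Enum


class Approach(Enum):
    CLOSED = "closed/managed models"
    HYBRID = "mix of managed and open models"
    OPEN_OR_GOVERNED = "open-source or tightly governed deployments"


@dataclass
class Advice:
    approach: Approach
    consider_fine_tuning: bool  # the "specific requirements" case applies on top of any approach


def recommend(team_size: str, regulated: bool, domain_specific: bool = False) -> Advice:
    """Encode the rule-of-thumb framework; team_size is 'lean', 'mid', or 'enterprise'."""
    if regulated or team_size == "enterprise":
        approach = Approach.OPEN_OR_GOVERNED
    elif team_size == "mid":
        approach = Approach.HYBRID
    elif team_size == "lean":
        approach = Approach.CLOSED
    else:
        raise ValueError(f"unknown team_size: {team_size!r}")
    return Advice(approach=approach, consider_fine_tuning=domain_specific)
```

Note that regulation overrides team size here, which mirrors the framework's "large enterprises or regulated industries" grouping.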

Choosing the right LLM for your GTM stack

This is where most LLM discussions break down with looouuuud thuds.

Teams spend weeks debating models, only to realize later that the model was never the bottleneck… the bottleneck was everything around it.

Understanding how LLMs are developed and evaluated can help teams choose more deliberately, but it rarely settles the question on its own.

I’ve seen GTM teams plug really capable LLMs into their stack and still walk away… frustrated. Not because the model was weak… but because it was operating in isolation. No shared context, no clean signals, and no agreement on what ‘good’ even looks like.

Here’s why model quality alone does not fix GTM problems

Most GTM workflows resemble toddlers eating by themselves… well-intentioned, wildly messy, and in need of supervision.

Your data lives across:

- CRM systems

- Ad platforms

- Website analytics

- Product usage tools

- Intent and enrichment providers

LLMs can take in inputs from CRM, analytics, and other tools, but they often see only fragments rather than complete journeys. They can summarize what they see, but they cannot infer what was never shown.

This is why teams say things like:

- The insight sounds right, but I cannot act on it

- The summary misses what sales actually cares about

- The recommendation does not align with how our funnel works

The issue is not intelligence. It is missing context.

What actually makes LLMs useful for GTM teams

An LLM's usefulness for GTM teams depends partly on its context window, which determines how much information the model can consider at once. A larger window lets the model process longer documents or more data, but it only helps if what fills it is usable. In practice, LLMs become valuable when three things are already in place:

- Clean data

If your CRM stages are inconsistent or your account records are outdated, the model will amplify that confusion. Clean inputs do not mean perfect data, but they do mean data that follows shared rules.

- Cross-channel visibility

GTM decisions rarely depend on one signal. They depend on patterns across ads, website behavior, sales activity, and product usage. LLMs work best when they can reason across these signals instead of reacting to one slice of the story.

- Contextual signals

Numbers alone don’t tell the full story. Context comes from sequences, timing, and intent. An account that visited three times after a demo request means something very different from one that bounced once from a blog post. LLMs need that narrative layer to reason correctly.
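Here is one way to respect both a limited context window and the need for sequence. This sketch assumes a hypothetical `Event` record merged from CRM, ads, website, and product tools, and it approximates token counts by word count (real tokenizers count differently). It keeps the most recent events that fit the budget, then renders them oldest-first so the model sees timing and order rather than a bag of facts.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Event:
    timestamp: datetime
    source: str  # e.g. "crm", "ads", "website", "product"
    description: str


def format_event(event: Event) -> str:
    return f"{event.timestamp:%Y-%m-%d} [{event.source}] {event.description}"


def approx_tokens(text: str) -> int:
    # Rough stand-in for a real tokenizer: assumes ~1 token per whitespace-separated word.
    return len(text.split())


def build_timeline(events: list[Event], token_budget: int) -> str:
    """Keep the most recent events that fit the budget, then render them chronologically."""
    kept: list[Event] = []
    used = 0
    for event in sorted(events, key=lambda e: e.timestamp, reverse=True):
        cost = approx_tokens(format_event(event))
        if used + cost > token_budget:
            break  # stop at the first event that does not fit, keeping a contiguous recent window
        kept.append(event)
        used += cost
    kept.reverse()  # oldest first, so the narrative reads in order
    return "\n".join(format_event(e) for e in kept)
```

With a demo request followed by three pricing-page visits, a generous budget yields all four lines in order, while a tight budget keeps only the latest visits. Whether to trim by recency, by signal importance, or by summarizing older activity is a design choice; recency is just the simplest to illustrate.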

Why embedding LLMs inside GTM platforms changes everything

This is where many teams breathe a sigh of relief and FINALLY see results.

When LLMs are embedded inside GTM and analytics platforms, they inherit:

- Structured data

- Defined business logic

- Permissioned access

- Consistent context across teams

Instead of guessing, the model works with known signals and rules. Outputs become more explainable… recommendations become easier to trust… and teams stop arguing about whether the insight is real and start acting on it.

(This is also where LLMs move from novelty to infrastructure.)

Where Factors.ai fits into this picture

Tools like Factors.ai approach LLMs differently from generic AI wrappers.

The focus is not on exposing a chat interface or swapping one model for another. The focus is on building a signal-driven system where LLMs can reason over:

- Account journeys

- Intent signals

- CRM activity

- Ad interactions

- Funnel movement

In this setup, LLMs are not asked to invent insights; they are asked to interpret what is actually going on (AKA reality).

Now, this distinction matters A LOT because it is the difference between an assistant that sounds confident and one that actually helps teams make better decisions.

How to think about LLM choice inside your GTM stack

If you are evaluating LLMs for GTM, start with these questions:

- Do we have connected, trustworthy data?

- Can the model see full account journeys?

- Are outputs grounded in real signals?

- Can teams trace recommendations back to source activity?

If the answer to these is no, switching models will NOT fix the problem. Instead, focus on building the right system around the model.
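The last question, tracing recommendations back to source activity, can be partly enforced in code. This sketch assumes the model is prompted to return the IDs of the events it relied on alongside each recommendation (a hypothetical convention, not a feature of any particular model). The check only confirms that cited sources exist; it cannot confirm the reasoning drawn from them is correct.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    text: str
    source_event_ids: list[str] = field(default_factory=list)


def check_grounding(rec: Recommendation, known_event_ids: set[str]) -> list[str]:
    """Return a list of problems; an empty list means every cited source exists."""
    problems: list[str] = []
    if not rec.source_event_ids:
        problems.append("no source activity cited")
    missing = [eid for eid in rec.source_event_ids if eid not in known_event_ids]
    if missing:
        problems.append(f"cites unknown events: {', '.join(missing)}")
    return problems
```

Recommendations that come back with problems can be held for review instead of being shown as finished insights.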

Where LLMs fall short (and why context still wins)

Once LLMs move beyond demos and into daily use, teams start noticing patterns that are hard to ignore.

The outputs sound confident… language is fluent… and reasoning feels plausible.

BUT something still feels off.

One key limitation is that an LLM's problem-solving ability is constrained by the quality and completeness of the context it is given. Without sufficient, accurate context, even strong step-by-step reasoning can fall short, especially on complex tasks.

This section exists because most LLM comparison articles stop right before this point. But for B2B teams, this is where trust is won or lost.

- Hallucinations and confidence without grounding

The most visible limitation is hallucination. But the issue is not ONLY that models get things wrong.

It is that they get things wrong confidently. (*lets out a HUGE sigh*)

In GTM and analytics workflows, this shows up as:

- Explanations that ignore recent pipeline changes

- Recommendations based on outdated assumptions

- Summaries that smooth over important exceptions

- Confident answers to questions that should have been flagged as incomplete

They also make users question whether the LLM can reliably handle complex, multi-step problems.

In isolation, these mistakes are easy to miss. At scale, they erode trust. Teams stop acting on insights because they are never quite sure whether the output reflects reality or pattern-matching.

- Lack of real-time business context

Most LLMs do not have direct access to live business systems by default.

They do not know:

- Which accounts just moved stages

- Which campaigns were paused this week

- Which deals reopened after going quiet

- Which product events matter more internally

Without this context, the model reasons over snapshots or partial inputs. That is fine for general explanations, but it breaks down when decisions depend on timing, sequence, and recency.

This is why teams often say the model sounds smart but feels… behind.

- Inconsistent outputs across teams

Another big problem is inconsistency.

Two people ask similar questions.

They get slightly different answers.

But both sound reasonable and correct.

In B2B environments, this creates friction. Sales, marketing, and leadership need shared understanding. When AI outputs vary too much, teams spend time debating the answer instead of acting on it.

Now, consistency is not about forcing identical language. It IS about anchoring outputs to shared logic and shared data.

Why decision-makers still hesitate to trust AI outputs

At the leadership level, the question is never, “Is the model intelligent?”

It is:

- Can I explain this insight to someone else?

- Can I trace it back to real activity?

- Can I justify acting on it if it turns out to be wrong?

LLMs struggle when they cannot show their work. Decision-makers are comfortable with imperfect data if it is explainable. They are uncomfortable with polished answers that feel opaque.

This is where many AI initiatives stall. Not because the technology failed, but because trust was never fully earned.

The Future of LLMs in B2B Decision-Making

The most important shift around LLMs is not about bigger models or better benchmarks.

It is about where they live and what they are allowed to do.

In B2B, LLMs are moving beyond simple answer engines. They are becoming decision copilots that operate inside real systems, with real constraints.

- From answers to decisions

Early LLM use focused on responses… you ask a question… and get an answer.

That works for exploration, but does not scale for execution.

The next phase is about:

- Recommending next actions

- Explaining trade-offs

- Flagging risk and opportunity

- Summarizing complex situations for faster decisions

To support complex business decisions, LLMs need to handle multi-step tasks and detailed reasoning across domains.

This only works when LLMs understand business context, not just language. The models are already capable, and the systems around them are catching up.

- Agentic workflows tied to real data

Another visible shift is the rise of agentic workflows.

Instead of one-off prompts, teams are building systems where LLMs:

- Monitor signals continuously

- Trigger actions based on conditions

- Coordinate across tools

- Update outputs as new data arrives

These workflows often rely on customizable, conversational agents that interact with business systems as conditions change.

In GTM environments, this looks like agents that watch account behavior, interpret changes, and surface insights before humans ask for them.

The key difference is grounding. These agents are not reasoning in a vacuum… they are tied to live data, defined rules, and permissioned access.
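A minimal sketch of the "trigger actions based on conditions" part might look like the following. The account fields, thresholds, and action labels are all hypothetical. The point is the structure: explicit, inspectable rules decide when something happens, and a model would only be called for matched actions, with the account state passed in as grounded context.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AccountState:
    account_id: str
    stage: str
    pricing_visits_last_7d: int
    days_since_last_touch: int


@dataclass
class Rule:
    name: str
    condition: Callable[[AccountState], bool]
    action: str  # hypothetical action label, e.g. "notify_owner"


# Illustrative rules; real thresholds would come from your own funnel definitions.
RULES = [
    Rule(
        "high intent, no recent touch",
        lambda a: a.pricing_visits_last_7d >= 3 and a.days_since_last_touch > 14,
        "notify_owner",
    ),
    Rule("reopened deal", lambda a: a.stage == "reopened", "summarize_for_sales"),
]


def evaluate(state: AccountState, rules: list[Rule] = RULES) -> list[tuple[str, str]]:
    """Return (rule name, action) for every rule whose condition holds for this account."""
    return [(rule.name, rule.action) for rule in rules if rule.condition(state)]
```

Running `evaluate` each time new data arrives for an account approximates the "monitor signals continuously" loop; keeping the rules outside the model is what makes the resulting actions explainable.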

- Fewer standalone chats (and more embedded intelligence)

Standalone chat interfaces are useful for learning. They are less useful for running a business.

The real future of LLMs in B2B is ‘embedded intelligence’ (oohh, that’s a fancy term, isn’t it?!). But what I’m saying is… models sit inside:

- Dashboards

- Workflows

- CRM views

- Analytics reports

- Planning tools

Inside these platforms, LLMs can also help with development work such as writing and debugging code.

In this case, the user does not think about which model is running. They care about whether the insight helps them act faster and with more confidence.

This shift also explains why many B2B teams will never consciously choose an LLM. They will choose platforms that have already made those decisions well.

Here’s what B2B leaders should prioritize next

If you are responsible for GTM, analytics, or revenue systems, the priorities are becoming clearer.

Focus on:

- Connecting first-party data across systems

- Defining shared business logic

- Making signals explainable

- Embedding LLMs where decisions already happen

Leaders should also plan for how these models will be deployed and scaled as usage grows.

Model selection still matters, but it is no longer the main lever. Context, integration, and trust are.

Teams that get this right will spend less time debating insights and more time acting on them.

FAQs for LLM Comparison

Q. What is the best LLM for B2B teams?

There is no single best option. The right choice depends on your data maturity, compliance needs, and how deeply the model is embedded into workflows. Many B2B teams use more than one model, directly or indirectly, through tools.

Q. How do LLM rankings differ for enterprises vs individuals?

Individual rankings often prioritize creativity or raw intelligence. Enterprise rankings prioritize consistency, governance, integration, and cost predictability. What works well for personal use can break down in production.

Q. Are open-source LLMs safe for enterprise use?

They can be, when deployed and governed correctly. Open-source models offer control and transparency, but they also require operational ownership. Safety depends more on implementation than on licensing.

Q. Which LLM is best for analytics and data analysis?

Models that handle structured reasoning and long context tend to perform better for analytics. The bigger factor, though, is access to clean, connected data. Without that, even strong models produce shallow insights.

Q. How do companies actually use LLMs in GTM and marketing?

Most companies use LLMs for interpretation rather than creation. Common use cases include summarizing account activity, explaining funnel changes, prioritizing outreach, and supporting decision-making across teams. Some teams also use LLMs to generate code from natural-language instructions, which helps automate parts of marketing and GTM workflows.

Q. Do B2B teams need to choose one LLM or multiple?

Most teams end up using multiple models, often without realizing it. Different tools in the stack may rely on different LLMs, especially when addressing needs across multiple domains.

A hybrid approach reduces dependency and increases flexibility.

Q. How important is data quality when using LLMs?

It is foundational. LLMs amplify whatever data they are given. Clean, connected data leads to useful insights. Fragmented data leads to confident but shallow outputs.

