How Large Language Models (LLMs) Work, and What Marketers Should Actually Know

Learn how Large Language Models function and discover practical LLM use cases, from sentiment analysis to "digital twins", that will turn your AI from a toy into a teammate.

Imagine you’re at your desk, coffee in hand, staring at a blank content brief that’s due in 30 minutes (we’ve all been there). You open up a Large Language Model (LLM) like ChatGPT or Claude, and bam, you get a usable first draft.

It feels like magic, doesn't it? Spoiler alert: It’s not.

There’s solid math, smart engineering, and (surprise!) human psychology under the hood. Understanding how LLMs work isn’t just nerd talk; it’s how you get reliable results when you ask for that perfect paragraph or a catchy ad headline.

In this article, I’m breaking down the complex, geeky, and technical process into a friendly, usable blog. Ready? Let’s go.

TL;DR

- LLMs predict, they don't "think": These models are statistical engines that guess the most likely next piece of a sentence based on patterns they learned from massive amounts of data.

- The "secret sauce" is context: Using Transformers and Self-Attention, LLMs can analyze every word in your prompt at once to understand the specific meaning behind your request.

- Prompting is the new coding: To get high-quality results, you need a structured framework like COSTAR, providing context, objectives, and clear constraints rather than just generic "write a blog" commands.

- Marketers are the orchestrators: While the AI handles the heavy lifting of data analysis and drafting, humans remain essential for the strategic nuance, fact-checking, and final brand "soul".

Why understanding ‘how LLMs work’ actually matters

For us marketers, understanding the "how" isn't about becoming a data scientist (thank goodness, because I still struggle with advanced Excel formulas). It’s about predictability and control.

When you understand the mechanics, you stop treating LLMs like magic and start treating them like a highly sophisticated statistical engine. This shift helps you:

- Debug bad outputs: Instead of getting frustrated when a prompt fails, you’ll know exactly which "lever" to pull to fix it.

- Scale your creativity: You’ll find ways to automate the boring stuff (like content repurposing) while keeping the human "soul" in your brand.

- Future-proof your career: In 2026, the best marketers aren't the ones who write the fastest; they’re the ones who orchestrate the best AI workflows.

And before you ask the next question... Will AI take my job? No, it won’t. You can read more about this in the article "Will AI replace marketers?"

So…what is an LLM, anyway?

A Large Language Model (LLM) is a type of artificial intelligence trained on massive amounts of text data (books, articles, websites) to predict the next word in a sequence. Because it has learned patterns at scale, it can generate coherent responses, answer questions, translate languages, summarize content, and more.

Imagine the autocomplete on your phone. You type "How are," and it suggests "you." An LLM does the same thing, but it has been trained on a vast amount of text from across the internet to do it. It doesn't "know" facts the way a human does; it calculates the statistical probability of which word (or part of a word) should come next based on the patterns it learned during training.

The term “large” refers to two things:

- Lots of data it learned from, and

- Lots of parameters: the internal knobs the model uses to make decisions about language.

How does an LLM actually work?

When you type a prompt into an LLM, it doesn't just "think" and reply. It goes through a very specific, multi-step assembly line.

Step 1: The training phase

Training an LLM involves feeding it text so it can learn language patterns. These models use an architecture called a transformer, with an attention mechanism that helps the model figure out which words matter most in a sentence, no matter where they are.
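To make "learning language patterns" a little more concrete, here is a deliberately tiny sketch. It is not how a transformer is trained; it just counts which word tends to follow which in a few made-up sentences, which is the same "predict what comes next" idea at toy scale. The function names and the training sentences are invented for illustration.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count which word follows which across the training sentences."""
    follow_counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current_word, next_word in zip(words, words[1:]):
            follow_counts[current_word][next_word] += 1
    return follow_counts

def predict_next(model, word):
    """Return (next_word, probability) pairs, most likely first."""
    counts = model.get(word.lower())
    if not counts:
        return []  # the model never saw this word during "training"
    total = sum(counts.values())
    return [(candidate, count / total) for candidate, count in counts.most_common()]

# Made-up training text, purely for illustration.
corpus = [
    "how are you",
    "how are you doing",
    "how are things",
    "how is it going",
]

model = train_bigram_model(corpus)
print(predict_next(model, "are"))  # "you" ranks first, "things" second
```

A real LLM replaces the counting table with billions of parameters and looks at far more than one previous word, but the output has the same shape: a ranked list of candidates with probabilities.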

Step 2: Tokenization (The shredder)

The model can’t read "sentences." It breaks your text into smaller chunks called tokens. A token can be a whole word, a prefix like ‘un-’, or even just a few letters.

Fun fact: This is why LLMs sometimes struggle with spelling words backwards; they see the "token" as a single unit, not a collection of individual letters.
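Here is a rough sketch of the shredding step. Real tokenizers learn their vocabulary from data and use more involved algorithms; this toy version assumes a tiny hand-written vocabulary (`VOCAB` is hypothetical) and simply grabs the longest known piece each time.

```python
# Hypothetical mini-vocabulary; real tokenizers learn tens of thousands of pieces from data.
VOCAB = {"un", "believ", "able", "market", "ing", "er", "s"}

def tokenize(word, vocab=VOCAB):
    """Greedy longest-match split of one word into known pieces."""
    tokens = []
    position = 0
    while position < len(word):
        # Try the longest possible piece first, then shrink.
        for end in range(len(word), position, -1):
            piece = word[position:end]
            if piece in vocab:
                tokens.append(piece)
                position = end
                break
        else:
            # No known piece starts here: fall back to a single character.
            tokens.append(word[position])
            position += 1
    return tokens

tokens = tokenize("unbelievable")
print(tokens)                      # ['un', 'believ', 'able']
print("".join(reversed(tokens)))   # ablebelievun
print("unbelievable"[::-1])        # elbaveilebnu
```

The last two lines hint at why reversing a word is awkward for a model: flipping the order of the chunks is not the same as flipping the letters inside them.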

Step 3: Embeddings (The map)

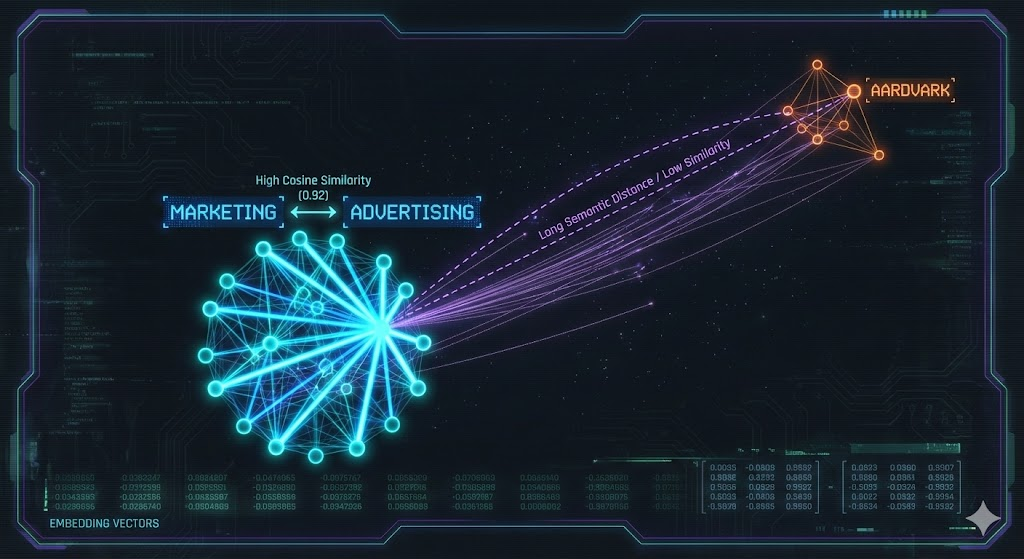

Each token is turned into a list of numbers called an embedding. These numbers act like coordinates on a massive, multi-dimensional map. Words with similar meanings (like "marketing" and "advertising") are placed close together on this map, while unrelated words (like "marketing" and "elephant") are miles apart.
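If you want to see the "map" idea in numbers, here is a minimal sketch. The three-number vectors are made up for illustration (real embeddings have hundreds or thousands of dimensions and are learned, not typed in by hand), and closeness is measured with cosine similarity, a common way to compare embeddings.

```python
import math

# Made-up 3-number embeddings, for illustration only.
EMBEDDINGS = {
    "marketing":   [0.9, 0.8, 0.1],
    "advertising": [0.8, 0.9, 0.2],
    "elephant":    [0.1, 0.2, 0.9],
}

def cosine_similarity(a, b):
    """Closer to 1.0 means the vectors point the same way; closer to 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

close = cosine_similarity(EMBEDDINGS["marketing"], EMBEDDINGS["advertising"])
far = cosine_similarity(EMBEDDINGS["marketing"], EMBEDDINGS["elephant"])
print(f"marketing vs advertising: {close:.2f}")
print(f"marketing vs elephant:    {far:.2f}")
```

With these invented numbers, the first score lands near 1 and the second is much lower, which is the numeric version of "close together" and "miles apart".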

Step 4: The transformer & self-attention (The context king)

This is the "secret sauce." Most modern LLMs use a Transformer architecture. The "Self-Attention" mechanism allows the model to look at every word in your prompt simultaneously and decide which ones are most important for the context.

For example, if you say "We had a picnic on the bank of the river," the model knows you mean a river bank, not a place where you keep your money, because it pays attention to the word "river".
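For the curious, the core arithmetic of attention fits in a few lines. This is a heavily simplified sketch: real transformers use learned query, key, and value projections and many attention heads, while here the raw (made-up) token vectors play all three roles. Each vector is two invented numbers: "water-ness" and "money-ness".

```python
import math

def softmax(scores):
    """Turn raw scores into weights that add up to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    return softmax(scores)

def attend(query, keys, values):
    """Blend the value vectors using the attention weights."""
    weights = attention_weights(query, keys)
    dims = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dims)]

# Made-up vectors: [water-ness, money-ness]. "bank" starts out ambiguous.
tokens = ["the", "river", "bank"]
vectors = [[0.1, 0.1], [2.0, 0.0], [1.0, 1.0]]

bank = vectors[2]
weights = attention_weights(bank, vectors)
contextual_bank = attend(bank, vectors, vectors)

for token, weight in zip(tokens, weights):
    print(f"bank attends to {token!r} with weight {weight:.2f}")
print("bank after attention:", [round(x, 2) for x in contextual_bank])
```

In this toy setup "bank" pays far more attention to "river" than to "the", and its blended vector ends up with more water-ness than money-ness. That shift is the numeric version of the model deciding which meaning you intended.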

Step 5: The prediction

Finally, the model looks at all that context and predicts the next token. It doesn't just pick one; it creates a list of likely candidates with percentages attached.

"B2B marketing is..."

- ...crucial (40%)

- ...evolving (30%)

- ...hard (10%)

It picks one (usually the most likely, but sometimes a slightly "random" one to stay creative) and repeats the process until the answer is done.
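The "sometimes slightly random" part is usually controlled by a setting commonly called temperature. Here is a minimal sketch using the three candidates from the example; the remaining 20% (all the other possible words) is left out, so the numbers get renormalized. The function names are invented for illustration.

```python
import math
import random

# Candidates from the example; other possible words are omitted for simplicity.
candidates = {"crucial": 0.40, "evolving": 0.30, "hard": 0.10}

def apply_temperature(probs, temperature):
    """Rescale probabilities: low temperature sharpens, high temperature flattens."""
    scaled = {tok: math.exp(math.log(p) / temperature) for tok, p in probs.items()}
    total = sum(scaled.values())
    return {tok: value / total for tok, value in scaled.items()}

def pick_next_token(probs, temperature=1.0, rng=random):
    """Pick one token: greedy at temperature 0, weighted random otherwise."""
    if temperature == 0:
        return max(probs, key=probs.get)
    adjusted = apply_temperature(probs, temperature)
    tokens = list(adjusted)
    return rng.choices(tokens, weights=[adjusted[t] for t in tokens], k=1)[0]

print(pick_next_token(candidates, temperature=0))   # always the top candidate
print(apply_temperature(candidates, 0.5))           # "crucial" dominates more
print(apply_temperature(candidates, 2.0))           # candidates move closer together
print(pick_next_token(candidates, temperature=1.0)) # varies from run to run
```

Low temperature suits tasks where you want consistency (summaries, data extraction); higher values suit brainstorming, where a less obvious word is a feature, not a bug.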

Step 6: Prompting (This is where we come in)

Your prompt acts like instructions for the model; the clearer you make them, the better the output will be. LLMs don’t inherently understand goals; they follow patterns you specify. So instead of “write a blog,” you get better results with “write a 600-word blog about X with subtitles and examples.”

In simple terms, think of it like digital clay; you’re the one who has to mold it into something useful.

Popular LLM Tools that marketers can use today

Now that we’ve got the science sorted, let’s talk shop.

Different LLMs are best at different things. If you only use one tool, you’re like a chef with only a microwave. Sure, you can make dinner, but it won't be a masterpiece.

Here is the "dream team" of tools that B2B marketers are actually using:

The "Big Three":

- ChatGPT (OpenAI): Now powered by GPT-5.1, it is surprisingly flexible for everything from brainstorming LinkedIn posts to analyzing a screenshot of your funnel to find where you're losing users.

- Claude (Anthropic): Claude feels more "human" and is the gold standard for technical accuracy and clean, well-documented code. It uses a feature called Artifacts to let you build interactive interfaces or documents right in the sidebar.

- Gemini (Google): It lives inside your Google Docs and Sheets, making it the best choice for teams who need real-time search data to validate their content.

The Specialists:

- Perplexity: Think of it as a search engine that talks back. It is essential for product discovery and research because it cites its sources as it goes, so there's less wondering whether the AI just made up a statistic.

- Jasper: Built specifically for high-volume marketing teams. It can learn your specific Brand Voice by scanning your website, ensuring your blog posts actually sound like you and not a generic robot.

- Surfer SEO: The Search General. It doesn't just write; it uses NLP (Natural Language Processing) to tell you exactly which keywords and headings you need to outrank your competitors.

The "Wait, AI does that?" tools

- Clay: It allows you to build custom ICP filters and enrichment workflows that turn a static list into a living, breathing lead engine.

- Synthesia: It lets you produce high-quality videos without a camera or crew, making it perfect for scaling personalized sales demos.

- ElevenLabs: Need to turn a blog post into a podcast? It generates natural, studio-quality audio in seconds.

- Zapier AI Agents: You describe a workflow (like "summarize new leads in Slack"), and it builds the automation for you, connecting tools that never used to speak the same language.

Looking for alternatives to Clay? Read this blog on Clay alternatives for GTM teams.

LLM use cases for marketers: What can you do with LLMs?

If you’re only using LLMs to "write a blog post about SEO," you’re using the sharpest knife from Japan to open a bag of chips. It’ll get the job done, sure, but you’re missing out on its capabilities. In 2026, the coolest B2B teams are using these models for tasks that would have taken a human team weeks to finish.

Here’s how they’re actually doing it:

- The "Vibe Check" at Scale (Sentiment Analysis): Imagine feeding 500 G2 reviews or 1,000 Slack community messages into an LLM. Instead of reading them one by one (ouch), you ask the model to "Identify the top three things people hate about our onboarding". It acts like a high-speed detective, spotting patterns in seconds that a human might miss after their third cup of coffee.

- The "Digital Twin" (Synthetic Personas): Ever wish you could interview your ICP (Ideal Customer Profile) at 2 AM? You can. Create a synthetic persona by giving the LLM your customer data. Ask it: "You are a CTO at a mid-market SaaS company. What part of this landing page makes you want to close the tab?" (Warning: It might be brutally honest).

- The Content Shape-Shifter (Intelligent Repurposing): Don't just copy and paste. Give the LLM a 45-minute webinar transcript and tell it to "Extract five spicy takes for LinkedIn, three 'how-to' points for a newsletter, and one executive summary for a C-suite email". It’s like having a content chef who can turn one giant turkey into a seven-course meal.

- "Spy vs. Spy" (Sales Enablement): Feed the model your competitor's latest feature announcement. Ask it to "Generate a 'Battle Card' for our sales team, highlighting exactly where our product still wins". It turns dry technical updates into ammunition for your next discovery call.

- The Anti-Groupthink Partner: Stuck in a creative rut? Ask the LLM to "Give me 10 marketing campaign ideas for a cloud security product, but make them themed around 1920s noir detective novels". Most will be weird, but one might just be the creative spark you needed to stand out in a sea of corporate blue.

Now that we know what these models can do, let's talk about the "control" you use to drive them.

Master the prompt: The marketer’s "code."

Ever prompted ChatGPT for a "blog post" and received something that read like a toaster's instruction manual?

We’ve all been there, staring at a screen, wondering why the magic feels so... beige.

To get those high-tier, "wow-I-can-actually-use-this" outputs, you need to move past the "Hey AI, write an SEO blog" stage. You need a framework.

The COSTAR framework

- C - Context: Who are we and what’s the backstory? If you don’t tell the LLM you’re a scrappy B2B fintech startup, it might assume you’re a 100-year-old insurance firm (and write like one).

- O - Objective: What is the actual mission? Instead of "write an email," try "Write an email to re-engage leads who ghosted us after the demo".

- S - Style: What's the vibe? Do you want "High-energy startup" or "Trusted industry veteran"? (Pick one, or it might try to be both, which is just awkward).

- T - Tone: This is the emotional quality. For a budget-related email, you’d want to be empathetic to their constraints, not sound like a pushy car salesman.

- A - Audience: Who are we talking to? Writing for an Operations Manager is a world away from writing for a Gen Z TikTok creator. Use the language they actually speak.

- R - Response: What should the final product look like? Tell it to "Use bullet points and keep it under 150 words" so you don’t get a sprawling essay you have to hack apart later.
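If you reuse COSTAR a lot, it can help to turn it into a small template so no section gets forgotten. The sketch below is one possible way to do that; the `CostarPrompt` class and its section headers are invented for illustration, and the example values are borrowed from the bullets above.

```python
from dataclasses import dataclass

@dataclass
class CostarPrompt:
    """One field per COSTAR letter; render() stitches them into a single prompt."""
    context: str
    objective: str
    style: str
    tone: str
    audience: str
    response: str

    def render(self) -> str:
        sections = [
            ("CONTEXT", self.context),
            ("OBJECTIVE", self.objective),
            ("STYLE", self.style),
            ("TONE", self.tone),
            ("AUDIENCE", self.audience),
            ("RESPONSE", self.response),
        ]
        return "\n\n".join(f"# {label}\n{text.strip()}" for label, text in sections)

prompt = CostarPrompt(
    context="We are a scrappy B2B fintech startup.",
    objective="Write an email to re-engage leads who ghosted us after the demo.",
    style="High-energy startup.",
    tone="Empathetic to budget constraints, never pushy.",
    audience="Operations Managers at mid-market companies.",
    response="Use bullet points and keep it under 150 words.",
)
print(prompt.render())
```

Because every field is required, Python will complain if you skip one, which is a handy nudge to think about audience and tone before you hit send.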

Pro-Tip: Treat the LLM Like a Junior Intern. Stop thinking of the LLM as an all-knowing God and start treating it like a very smart, very literal junior intern. If you wouldn't give a vague instruction to a human intern, don't give it to the LLM.

Few-Shot Prompting: This is just a fancy way of saying "Give it examples". Show it a paragraph you actually like, and say, "Write like this".

The Second Draft: Don't be afraid to give feedback! If the first version is too "corporate," tell it: "This is great, but make it 20% punchier and remove the word 'leverage'".
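Few-shot prompting is easy to template too. Here is a minimal sketch that stacks a couple of "before and after" examples ahead of the new request; the function name, the layout, and the sample copy are all made up for illustration, and different models may respond better to different layouts.

```python
def build_few_shot_prompt(instruction, examples, new_input):
    """Assemble an instruction, worked examples, and the new input into one prompt."""
    parts = [instruction.strip(), ""]
    for number, (source, rewritten) in enumerate(examples, start=1):
        parts.append(f"Example {number}")
        parts.append(f"Input: {source}")
        parts.append(f"Output: {rewritten}")
        parts.append("")
    # End with an open "Output:" so the model continues in the same pattern.
    parts.append(f"Input: {new_input}")
    parts.append("Output:")
    return "\n".join(parts)

# Invented sample copy, purely for illustration.
examples = [
    ("We leverage synergies to drive growth.", "We help teams grow faster. That's it."),
    ("Our solution is best-in-class.", "It works, and your team will actually use it."),
]

print(build_few_shot_prompt(
    "Rewrite the input in our brand voice: short, punchy, no jargon.",
    examples,
    "We provide end-to-end attribution capabilities.",
))
```

Two or three good examples usually say more about the voice you want than a paragraph of adjectives.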

The community POV (what you all loveee... AKA Reddit)

I decided to "scrape" (mentally, mostly) what the community is actually saying about all this. On subreddits like r/DigitalMarketing and r/PromptEngineering, these things are clear:

- Prompt Engineering is becoming "Workflow Engineering": Redditors are moving away from single prompts and toward building "chains" of actions. So this is a good time to master prompt engineering and start getting those "wow, I can actually use this" results.

- The "Human-in-the-Loop" is non-negotiable: The general consensus? AI is great at the first 80%, but that last 20% (the fact-checking, the specific brand wit, the strategic nuance) still requires a human brain. So, again, for the last time, here is your answer to the $1B question: AI won’t replace marketers.

- Specialization is key: General models are great, but the real "gold" lies in small, specialized models trained on industry-specific data. So it may be time to build your own MCP (Model Context Protocol) servers that plug your industry-specific data and tools into a model.
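The "chains" idea from the first point above can be sketched in a few lines: each step is a prompt template, and the output of one step becomes the input of the next. `fake_llm` is a stand-in so the example runs without any account or API key; in practice you would swap in a call to whichever model provider you use.

```python
def fake_llm(prompt):
    """Stand-in for a real model call; replace with your provider's client."""
    return f"[model reply to: {prompt[:40]}...]"

def run_chain(steps, initial_input, llm=fake_llm):
    """Feed each step's output into the next step's prompt template."""
    current = initial_input
    history = []
    for name, template in steps:
        prompt = template.format(input=current)
        current = llm(prompt)
        history.append((name, prompt, current))
    return current, history

# Hypothetical three-step workflow: transcript -> summary -> takes -> post.
steps = [
    ("summarize", "Summarize this webinar transcript in 5 bullets:\n{input}"),
    ("extract", "Extract three spicy takes from these bullets:\n{input}"),
    ("draft", "Write a LinkedIn post based on the strongest take:\n{input}"),
]

final_output, history = run_chain(steps, "...transcript text goes here...")
for name, prompt, output in history:
    print(f"{name}: {output}")
```

Keeping the history around matters more than it looks: when the final post comes out beige, you can check which step went wrong instead of rewriting one giant prompt.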

Don't just use LLMs; understand them

The "black box" of AI feels a lot less like a spooky mystery once you realize it’s just a glorified pattern-matching machine on speed. (It doesn’t “know” things, it’s just very good at sounding like it does.)

By getting cozy with tokens, transformers, and the art of structured prompts, you’re doing something big. You’re moving from being a passive observer to an active orchestrator of your marketing engine.

Because at the end of the day, the LLM isn't the marketer; you are. It doesn't have your gut instinct, your specific brand wit, or your deep understanding of why your customers actually buy.

It’s simply the most powerful pen you’ve ever held. It’s time to stop poking the box and start driving the machine. Now, go write something legendary.

FAQs on how LLMs work

Q1. Will LLMs eventually replace my entire marketing team?

No. (Breathe a sigh of relief).

They won't replace marketers, but they will absolutely replace marketers who refuse to use them. LLMs are incredible at the first 80% (the research, the drafting, the data-crunching), but they lack the "soul". They don’t have your gut instinct, your specific brand wit, or that weirdly specific understanding of why your customers actually buy. You are the orchestrator; the AI is just the (very fast) violin.

Q2. If an LLM doesn't actually 'know' things, how can I trust it?

You shouldn't, at least not blindly! (Psst! This is why fact-checking is still in your job description.)

Remember, an LLM is a statistical engine, not a database of facts. It calculates the probability of the next word. If you ask it for an obscure statistic, it might "hallucinate" a number that sounds right but is total fiction. Always treat its output like a first draft from a very confident, very sleep-deprived intern.

Q3. What’s the secret to making my AI-written content not look like... well, AI?

Stop giving it boring instructions! If you ask for a "blog post on SEO," you’re going to get "In the ever-evolving landscape of digital marketing..." (cringe). Use the COSTAR framework to give it a personality. Tell it to "be punchy," "avoid corporate jargon," or "write like a witty professor". Better yet, use Few-Shot Prompting: show it a paragraph you’ve actually written and tell it, "Copy this vibe".

Q4. Is it better to use one 'big' LLM or a bunch of small ones?

In 2026, the trend is moving toward specialization. While the "Big Three" (ChatGPT, Claude, Gemini) are great for general tasks, the real gold lies in specialized tools trained on specific data. For example, use Surfer SEO for search optimization or Jasper for keeping your brand voice consistent at scale. It’s about building a "workflow" where each tool handles what it’s best at, rather than asking one bot to do everything.

Q5. What is a 'token' and why should I care?

Think of tokens as the currency of AI. The model doesn't read words; it shreds them into chunks called tokens. This matters to you because most LLMs have a "context window", a limit on how many tokens they can "remember" at one time. If you feed it a 100-page whitepaper and then ask a question about the first page, it might have already "forgotten" the beginning. Understanding tokens helps you keep your prompts concise and effective.
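One practical way to respect a context window is to split long documents into chunks before sending them. The sketch below assumes roughly 4 characters per token for English text, which is only a rule of thumb (actual counts depend on the model's tokenizer), and both function names are invented for illustration.

```python
import math

def estimate_tokens(text):
    """Very rough guess: about 4 characters per token (an assumption, not a real tokenizer)."""
    return math.ceil(len(text) / 4)

def chunk_paragraphs(paragraphs, max_tokens):
    """Group paragraphs into chunks that each stay within a token budget.

    A single paragraph larger than the budget still becomes its own chunk.
    """
    chunks, current, used = [], [], 0
    for paragraph in paragraphs:
        cost = estimate_tokens(paragraph)
        if current and used + cost > max_tokens:
            chunks.append("\n\n".join(current))
            current, used = [], 0
        current.append(paragraph)
        used += cost
    if current:
        chunks.append("\n\n".join(current))
    return chunks

# Placeholder paragraphs standing in for a long whitepaper.
whitepaper = ["Intro " * 50, "Findings " * 80, "Method " * 60, "Conclusion " * 40]
for index, chunk in enumerate(chunk_paragraphs(whitepaper, max_tokens=250), start=1):
    print(f"chunk {index}: ~{estimate_tokens(chunk)} tokens")
```

You can then ask your question of each chunk (or summarize each one first), rather than hoping the model still "remembers" page one by the time it reaches page 100.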
